Design Matrix Regression Python

For simple linear regression, one can just write a linear mx + c function and call this estimator. Create the numeric-only design matrix X.


Except that's not what the StatsModels OLS fit does.

I see that there is some regression support within Pandas, but since I have my own customised regression routines, I am really interested in the construction of the design matrix (a 2D NumPy array or matrix) from heterogeneous data, with support for mapping back and forth between the columns of the NumPy object and the Pandas DataFrame from which it is derived. A regression model that is a linear combination of the explanatory variables may therefore be represented via matrix multiplication as $y = X\beta$, where $X$ is the design matrix and $\beta$ is a vector of the model's coefficients, one per variable. Here is the code I have so far.
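The column bookkeeping asked about above can be sketched with plain pandas. This is only one possible approach, not the questioner's code: the DataFrame contents and the names `encoded`, `columns`, and `index_of` are hypothetical, and `pd.get_dummies` is swapped in as a simple way to turn a categorical column into numeric ones.

```python
import numpy as np
import pandas as pd

# Hypothetical heterogeneous data: one numeric and one categorical column.
df = pd.DataFrame({
    "age": [23, 35, 41, 29],
    "city": ["Oslo", "Rome", "Oslo", "Lima"],
})

# One-hot encode the categorical column; drop_first avoids a dummy that
# would be perfectly collinear with the intercept added below.
encoded = pd.get_dummies(df, columns=["city"], drop_first=True, dtype=float)
encoded.insert(0, "intercept", 1.0)

X = encoded.to_numpy(dtype=float)                        # 2-D NumPy design matrix
columns = list(encoded.columns)                          # position -> column name
index_of = {name: i for i, name in enumerate(columns)}   # column name -> position

print(columns)
print(X[:, index_of["city_Oslo"]])
```

Keeping `columns` next to `X` is what lets a custom regression routine report its coefficients under the original DataFrame names.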

Contribute to dpotera/Python-Linear-Regression development by creating an account on GitHub. Residuals: the vector of residuals $e$ is just $e = y - Xb$. Using the hat matrix, $e = y - Hy = (I - H)y$. In mathematical terms suppose the dependent.
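The two expressions for the residual vector can be checked numerically. The sketch below uses synthetic data (the sample size, coefficients, and noise level are made up for illustration) and compares $y - Xb$ with $(I - H)y$.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 20
x = rng.uniform(0, 1, size=n)
X = np.column_stack([np.ones(n), x])            # intercept + one predictor
y = 1.0 + 2.0 * x + rng.normal(scale=0.1, size=n)

b = np.linalg.solve(X.T @ X, X.T @ y)           # least-squares coefficients
H = X @ np.linalg.solve(X.T @ X, X.T)           # hat matrix X (X'X)^-1 X'

e_direct = y - X @ b                            # e = y - Xb
e_hat = (np.eye(n) - H) @ y                     # e = (I - H) y

print(np.allclose(e_direct, e_hat))
```

Both routes give the same vector up to floating-point error, and the residuals are orthogonal to every column of X.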

The algorithm learns from those examples and their corresponding answers (labels) and then uses that to classify new examples. The fact that we can use the same approach with logistic regression as in the case of linear regression is a big advantage of sklearn. `X = np.arange(0, n, 1) / (2 * n)`, `Y = X * parameter + np.random.randn(1)`, `return X, Y`. I am not entirely sure if my X design matrix variable is correct.

For example, you can set the test size to 0.25, and therefore the model testing will be based on 25% of the data. If our input was D-dimensional before, we will now fit D + 1 weights, beginning with w0. `from content import regularized_least_squares`.

Let's take a look at how we could go about implementing linear regression from scratch using basic NumPy functions. Fit the logistic regression model. One important matrix that appears in many formulas is the so-called hat matrix, $H = X(X^\top X)^{-1}X^\top$, since it puts the hat on $Y$.

First, we have to re-create the design matrix used in the regression, since StatsModels does not provide this. It is a bit more convoluted to prove that any idempotent matrix is the projection matrix for some subspace, but that's also true.
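The projection properties mentioned here are easy to verify for a concrete hat matrix. The sketch below assumes a made-up 10 x 3 design matrix; it checks symmetry and idempotence and reads the dimension of the projected subspace off the trace, which for a projection matrix equals its rank.

```python
import numpy as np

rng = np.random.default_rng(1)
X = np.column_stack([np.ones(10), rng.normal(size=(10, 2))])  # 10 x 3 design matrix
H = X @ np.linalg.inv(X.T @ X) @ X.T                          # hat matrix

is_symmetric = np.allclose(H, H.T)       # H' = H
is_idempotent = np.allclose(H @ H, H)    # HH = H
subspace_dim = int(round(np.trace(H)))   # trace of a projection equals its rank

print(is_symmetric, is_idempotent, subspace_dim)
```

Here the subspace is the column space of X, so its dimension matches the number of linearly independent columns.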

Any design matrix X that contains these three columns will have a noninvertible moment matrix, and attempting a linear regression will fail. The original design matrix X. Linear regression in Python, Chapter 2.
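The text does not say which three columns are meant, so the sketch below assumes a common case: an intercept plus two dummy variables that always sum to one. The first column is then the sum of the other two, and the moment matrix $X^\top X$ loses rank.

```python
import numpy as np

# Hypothetical example: an intercept plus two complementary dummies.
group = np.array([0, 1, 0, 1, 1, 0])
X = np.column_stack([np.ones(6), group == 0, group == 1]).astype(float)

moment = X.T @ X                          # 3 x 3 moment matrix X'X
rank = np.linalg.matrix_rank(moment)      # rank below 3 means not invertible

print(moment)
print("rank:", rank, "of", moment.shape[0])
```

Dropping one of the dummies (or the intercept) restores full rank, which is why encoders often offer a "drop first level" option.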

It returns an array of function parameters for which the least-squares measure is minimized, together with the associated covariance matrix. Set up the model. To begin, we import the following libraries.
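The return values described above match SciPy's `curve_fit`, although the text does not name the estimator, so treat that pairing as an assumption. A minimal sketch with a hypothetical `line` function and synthetic data:

```python
import numpy as np
from scipy.optimize import curve_fit

def line(x, m, c):
    """Straight-line model y = m * x + c."""
    return m * x + c

# Synthetic data around y = 2x - 1 (values chosen only for illustration).
rng = np.random.default_rng(3)
x = np.linspace(0, 5, 40)
y = line(x, 2.0, -1.0) + rng.normal(scale=0.2, size=x.size)

params, cov = curve_fit(line, x, y)   # fitted parameters and their covariance
m_hat, c_hat = params
std_err = np.sqrt(np.diag(cov))       # one standard error per parameter

print(m_hat, c_hat, std_err)
```

The square roots of the covariance diagonal give rough standard errors for the slope and intercept.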

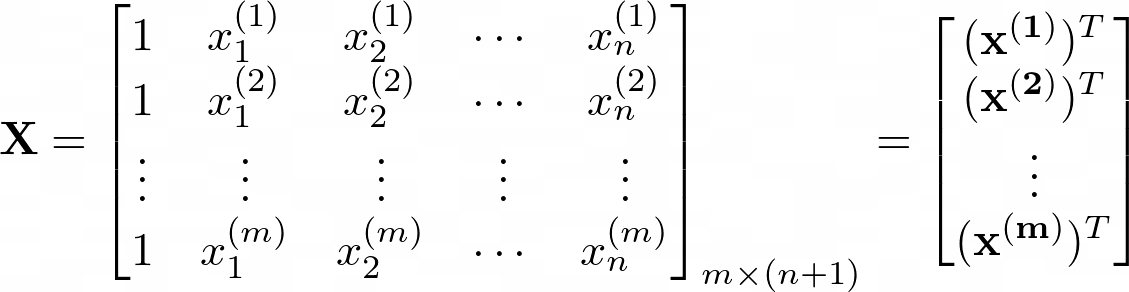

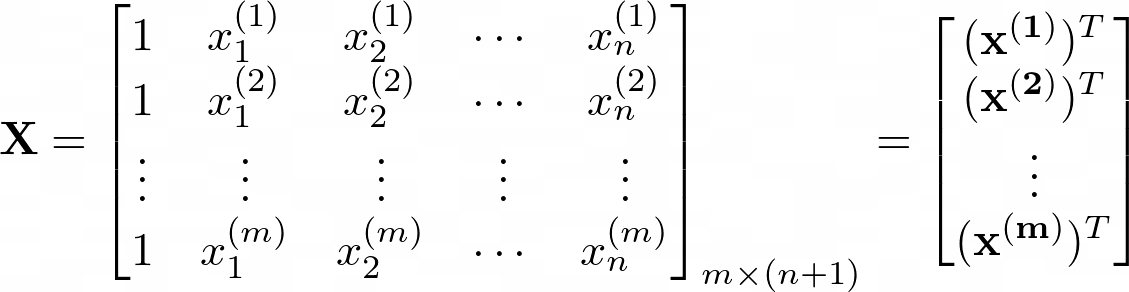

In a nutshell, it is a matrix, usually denoted $X$, of size $n \times p$, where $n$ is the number of observations and $p$ is the number of parameters to be estimated. It is recommended to use the Patsy package to do this, because it will be easier and it's the package that StatsModels uses itself, so the same formula can be entered to get the same design matrix. Where $y$ is a vector of the response variable, $X$ is the matrix of our feature variables (sometimes called the design matrix), and $\beta$ is a vector of parameters that we want to estimate.

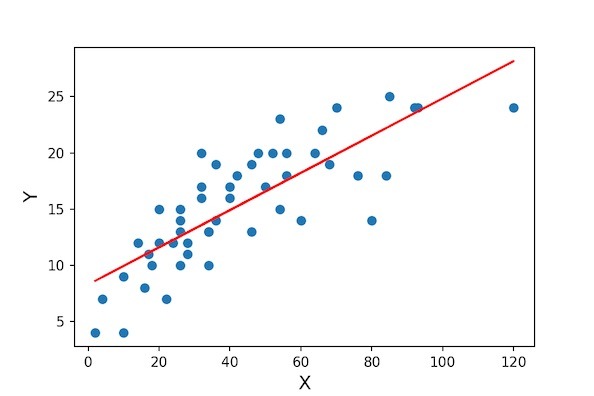

The first of these is always multiplied by one and so is actually the bias weight b, while the remaining weights give the regression weights for our original design matrix. (Frank Wood, fwood@stat.columbia.edu, Linear Regression Models, Lecture 11, Slide 20.) Hat matrix: puts the hat on Y. We can also directly express the fitted values in terms of only the X and Y matrices, and we can further define H, the hat matrix. The hat matrix plays an important role in diagnostics for regression analysis. Linear regression fit with matrix multiplication in Python: we can clearly see that our estimates nicely show the linear relationship between X and Y.
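A fit by matrix multiplication might look like the sketch below. The data are synthetic and the true bias (3.0) and slope (1.5) are made up; the column of ones is what turns the first weight into the bias.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 100
x = rng.uniform(0, 10, size=n)
y = 3.0 + 1.5 * x + rng.normal(scale=0.5, size=n)

# Design matrix: a column of ones (bias) followed by the predictor.
X = np.column_stack([np.ones(n), x])

# Normal equations: beta = (X'X)^-1 X'y
beta = np.linalg.inv(X.T @ X) @ X.T @ y
bias, slope = beta

y_hat = X @ beta                          # fitted values
print(bias, slope)
```

For larger or ill-conditioned problems, `np.linalg.lstsq` is usually a safer choice than forming the inverse explicitly.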

The same approach applies to other models too, so it is very easy to experiment with different models. A parameter for the intercept and a parameter for the slope. The design matrix is defined to be a matrix $X$ such that $X_{ij}$ (the j-th column of the i-th row of $X$) represents the value of the j-th variable associated with the i-th object.

$y = X\beta + \epsilon$. This might indicate that there are strong multicollinearity problems or that the design matrix is singular. Further matrix results for multiple linear regression.

`from content import model_selection`. The typical model formulation is. `import numpy as np`, then `def linReg(n, parameter):`.

It goes without saying that it works for multivariate regression too. Write H on board. Recently I was asked about the design matrix (or model matrix) for a regression model and why it is important.

Let us double-check the linear regression parameter estimates obtained by matrix multiplication against scikit-learn's LinearRegression model. Now set the independent variables (represented as X) and the dependent variable (represented as y). Matrix notation applies to other regression topics, including fitted values, residuals, sums of squares, and inferences about regression parameters.
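The double-check could be sketched as follows, assuming synthetic data with a single predictor. `LinearRegression` fits its own intercept, so it receives the raw predictor, while the matrix version gets an explicit column of ones.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(7)
x = rng.uniform(0, 10, size=(50, 1))                    # predictor, shape (50, 1)
y = 2.0 + 0.8 * x[:, 0] + rng.normal(scale=0.3, size=50)

# Matrix route: design matrix with an explicit intercept column.
X = np.column_stack([np.ones(len(x)), x])
beta = np.linalg.solve(X.T @ X, X.T @ y)

# scikit-learn route: the intercept is handled internally.
model = LinearRegression().fit(x, y)
agree = np.allclose(beta, [model.intercept_, model.coef_[0]])

print(beta, model.intercept_, model.coef_, agree)
```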

Recall from my previous post that linear regression typically takes the form. I am trying to create a design matrix using NumPy for a linear regression function. `from sklearn.datasets import make_regression`, `from matplotlib import pyplot as plt`, `import numpy as np`, `from sklearn.linear_model import LinearRegression`.

The link test is once again non-significant. Regression model class: we are now going to code the class. While acs_k3 does have a positive relationship.

We will see later how to read off the dimension of the subspace from the properties of its projection matrix. Create the logistic regression in Python. The design matrix, of dimensions N×D, is built as such, with each x being a data point from our training set.

Now we're ready to start. How would I add a column of 1s to it? `from content import design_matrix`.
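One way to add the column of 1s is `np.column_stack`. The sketch below rests on assumptions: the data generator is one reading of the garbled `linReg` snippet quoted earlier (the exact operators are not recoverable from the text), and the name `lin_reg_data` and the `seed` argument are hypothetical.

```python
import numpy as np

def lin_reg_data(n, parameter, seed=None):
    """Generate x in [0, 0.5) and y = parameter * x + Gaussian noise."""
    rng = np.random.default_rng(seed)
    x = np.arange(n) / (2 * n)
    y = parameter * x + rng.standard_normal(n)
    return x, y

x, y = lin_reg_data(50, 3.0, seed=0)

# Prepend a column of ones so the first coefficient acts as the intercept.
X = np.column_stack([np.ones_like(x), x])

print(X.shape)
print(X[:3])
```

A 1-D `x` on its own is not yet a design matrix; stacking it next to the ones gives the N x 2 array that the normal equations expect.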

Logistic regression is a supervised machine learning algorithm, which means the data provided for training is labeled, i.e. answers are already provided in the training set. $\epsilon$ is. `from content import least_squares`.

`X = df[['gmat', 'gpa', 'work_experience']]` and `y = df['admitted']`. Then apply `train_test_split`. In simple linear regression, i.e. Note that after including meals and full, the coefficient for class size is no longer significant.
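The split on `gmat`, `gpa`, `work_experience`, and `admitted` could be sketched as below. The original dataset is not included in the text, so the DataFrame here is randomly generated purely for illustration, and the rule used to create `admitted` is made up.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the admissions data (not the original dataset).
rng = np.random.default_rng(0)
n = 200
df = pd.DataFrame({
    "gmat": rng.integers(500, 800, size=n),
    "gpa": rng.uniform(2.0, 4.0, size=n).round(1),
    "work_experience": rng.integers(0, 7, size=n),
})
score = (0.01 * (df["gmat"] - 650) + 1.5 * (df["gpa"] - 3.0)
         + 0.3 * (df["work_experience"] - 3))
df["admitted"] = (score + rng.normal(scale=0.5, size=n) > 0).astype(int)

X = df[["gmat", "gpa", "work_experience"]]
y = df["admitted"]

# test_size=0.25 holds out 25% of the rows for testing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

clf = LogisticRegression(max_iter=1000).fit(X_train, y_train)
accuracy = clf.score(X_test, y_test)
print(len(X_train), len(X_test), accuracy)
```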
